CS224W-Machine Learning with Graph-GNNs and Algorithmic Reasoning

GNNs and Algorithmic Reasoning

- century saw unprecedented development of algorithms

- Sorting, shortest paths, graph search, routing

- Algorithmic paradigms such as greedy, divide-and-conquer, parallelism, recursion, deterministic vs non-deterministic

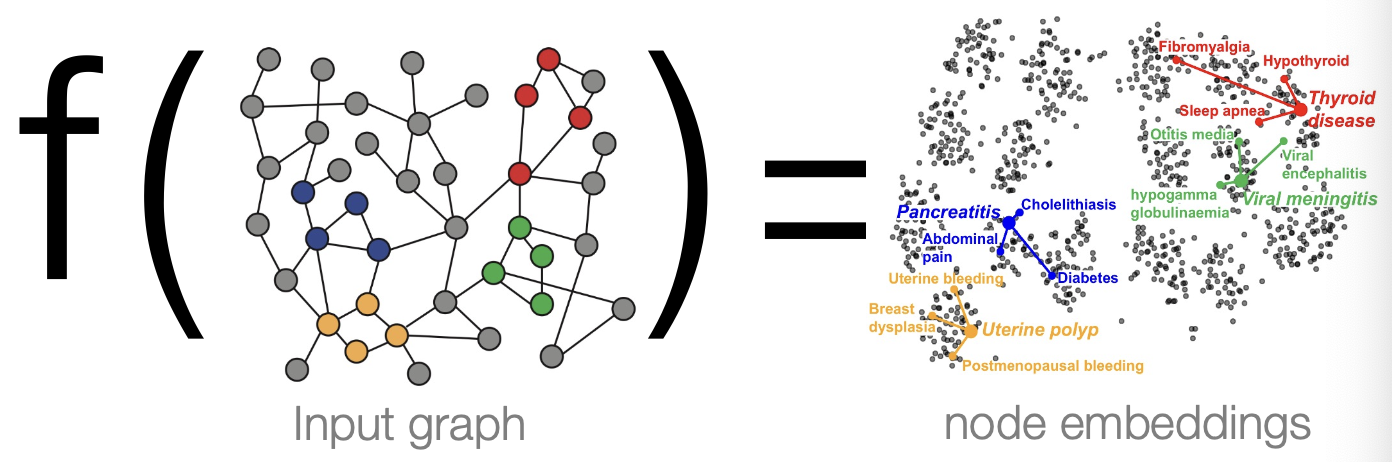

Graph Machine Learning

How to learn mapping function ?

Connection to classical graph algorithms unclear.

- So far treated GNNs as a “new” type of graph algorithm.

- But in reality, graph ML has deep connections to the theory of computer science

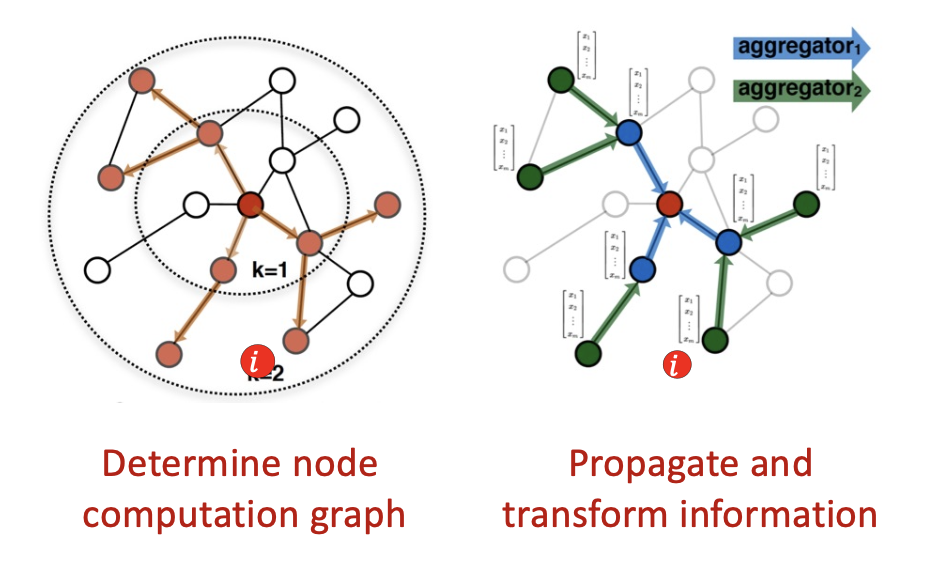

Graph Neural Networks

- GNNs defined by computation process

- I.e., how information is propagated across the graph to compute node embeddings

GNNs as graph algorithms

- We define “message passing” a computational process

- Message passing defines a class of algorithms on graphs

- But it’s not clear what algorithm(s)

- A clue to get started: We have already seen one algorithm GNNs can express…

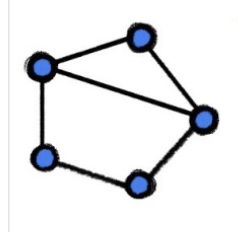

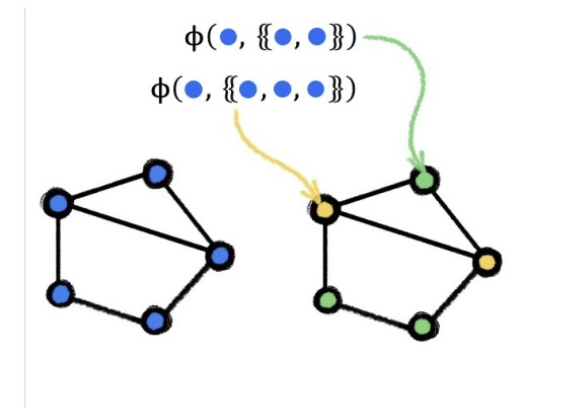

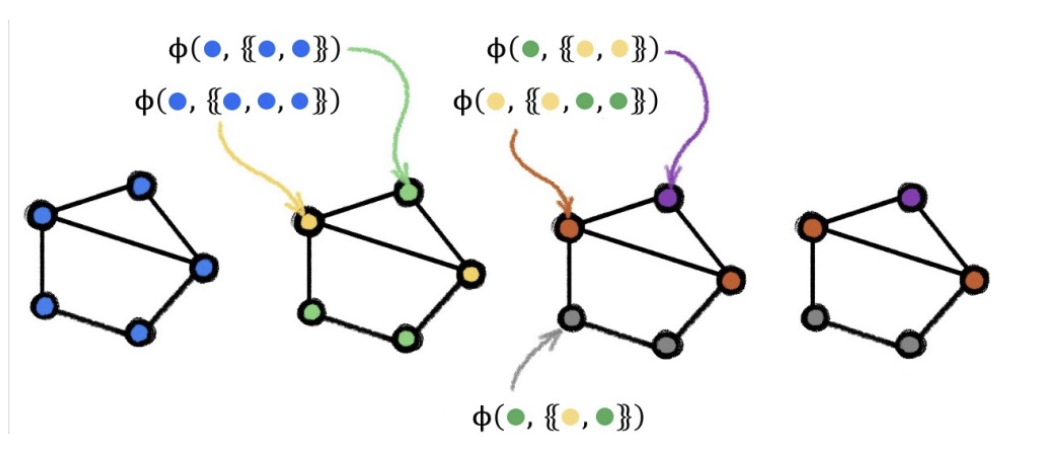

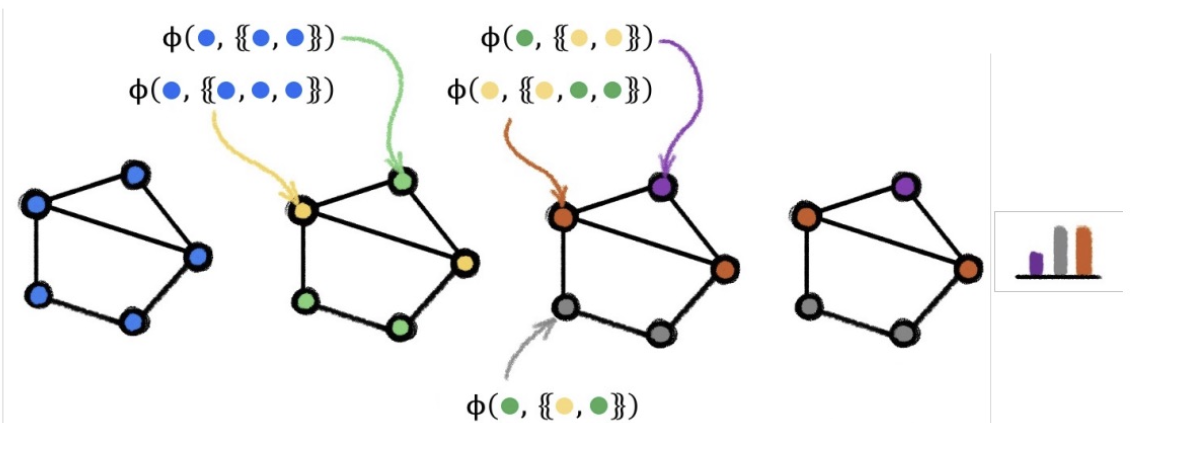

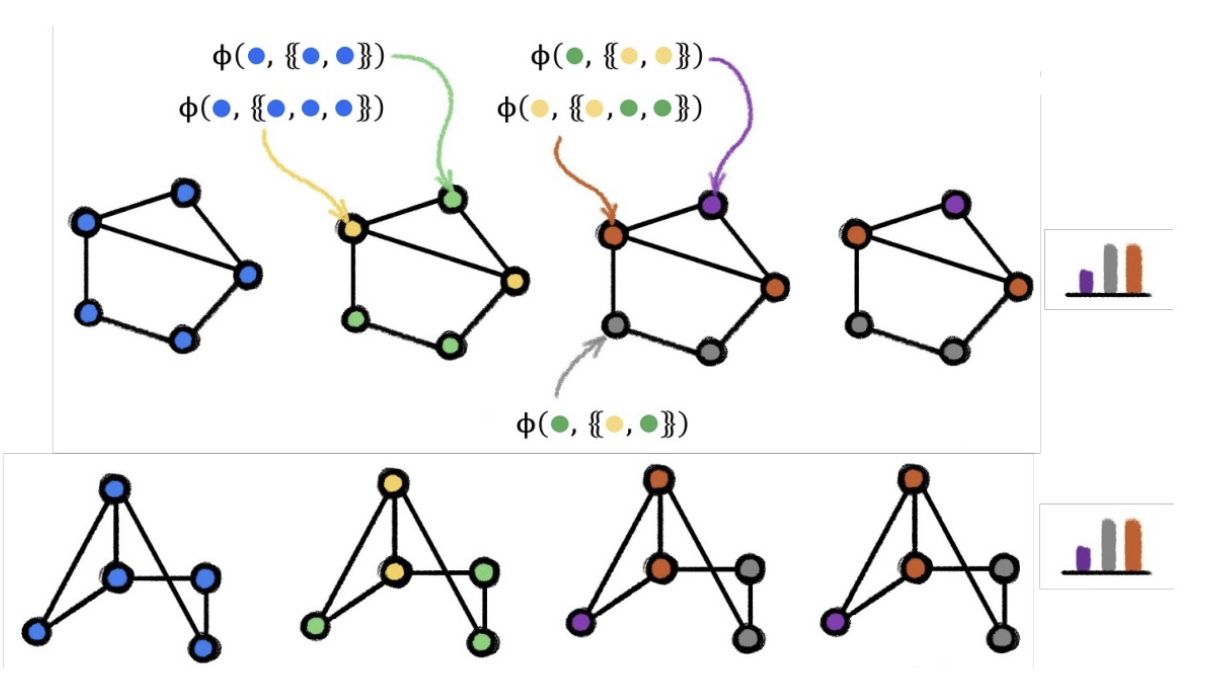

GNNs and the Weisfeiler-Lehman Isomorphism Test

- Simple test for testing if two graphs are the same:

- Assign each node a “color”

- Randomly hash neighbor colors until stable coloring obtained

- Read out the final color histogram

- Declare two graphs:

- Non-isomorphic if final color histograms differ

- Test inconclusive otherwise ( i.e., we do not know for sure that two graphs are isomorphic if the counts are the same)

- Running the test…

- We have seen GIN is as expressive as the 1-WL test

- i.e., Given , the following are equivalent:

- there exist parameters s.t.

- 1-WL distinguishes ’

- i.e., Given , the following are equivalent:

- GIN is a “neural version” of the 1-WL algorithm

- Replaces HASH function with learnable MLP

- But this does not mean that 1-WL is the only graph algorithm GNNs can simulate.

- An untrained GNN (random MLP = random hash) is close to the 1-WL test.

Algorithmic structure of neural networks

- A neural network architecture defines a learnable computer program

- Key intuition:

- MLPs easily learn smooth functions (e.g., linear, log, exp)

- MLPs bad at learning complex function (e.g., sums of smooth functions - i.e., for-loops)

- Approach: define progressively more complex algorithmic problems, and corresponding neural net architectures capable of solving each.

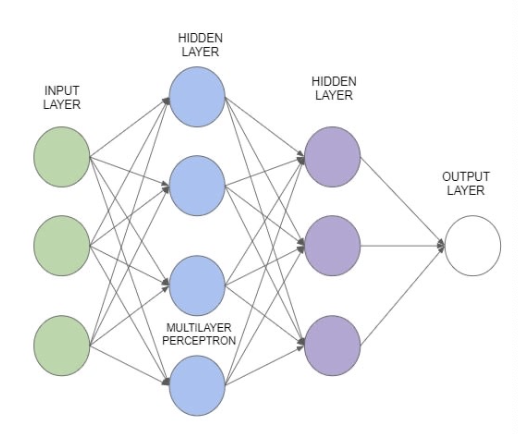

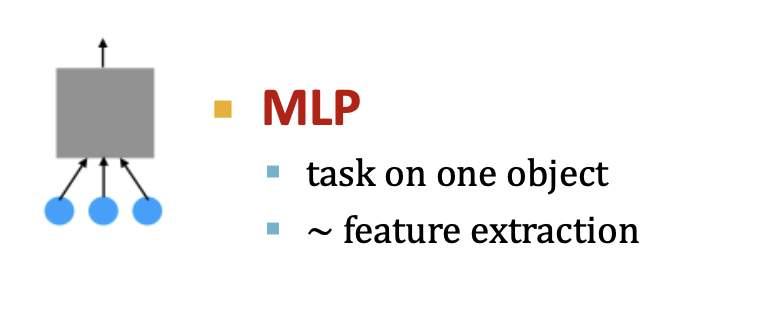

Neural Nets and Algorithm Structure

- Problem 1 (feature extraction):

- Input: “flat” features (e.g., color, size, position)

- Output: scalar value (e.g., is it round and yellow?)

- No other prior knowledge (minimal assumptions)

- Q: What neural network choice suits this problem?

- A: MLPs (multilayer perceptrons)

- Universal approximator

- Makes no assumptions on input/output structure.

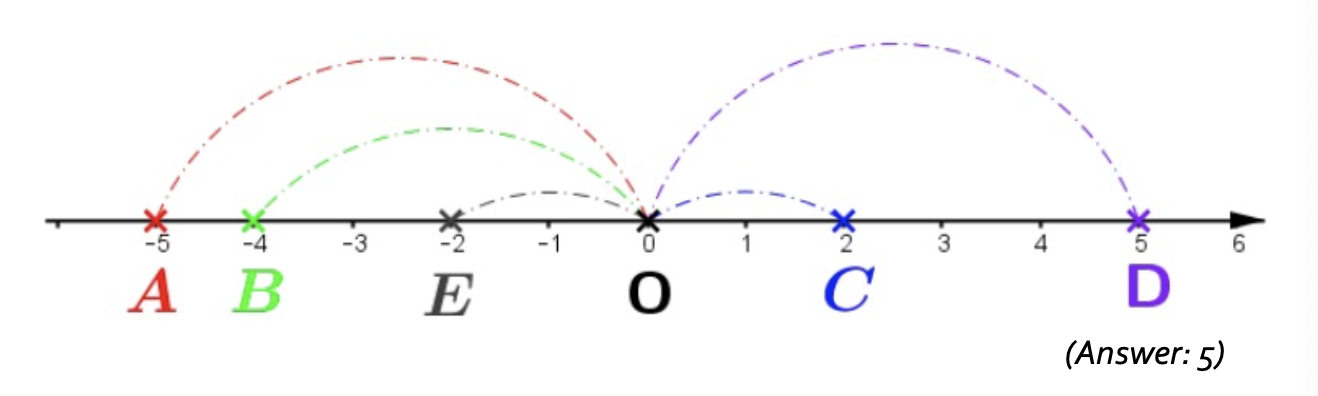

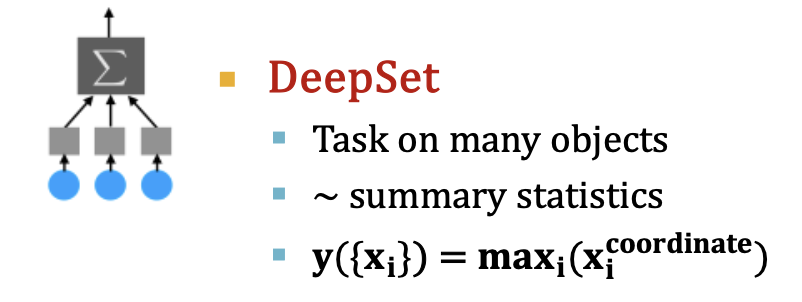

- Problem 2 (summary statistics):

- Input: a set of objects , each with features containing their coordinate and color

- Task Output: some aggregate property of the set (e.g., largest x-coordinate)

-

- MLP model:

- Not well suited to this task

- To learn max (and min) MLP has to learn to execute a for-loop

- This is a complex operation, MLP needs lots of data to learn

- New Deepset model:

-

- Well suited to this task

- Can approxmator softmax, a simple approx. to min/max

-

- Key points:

- Consequence: MLPs only must learn simple functions (log/exp)

- This can be done easily, without needing much data

- MLP can provably also learn this. But must learn complex for-loop, which requires lots of training data

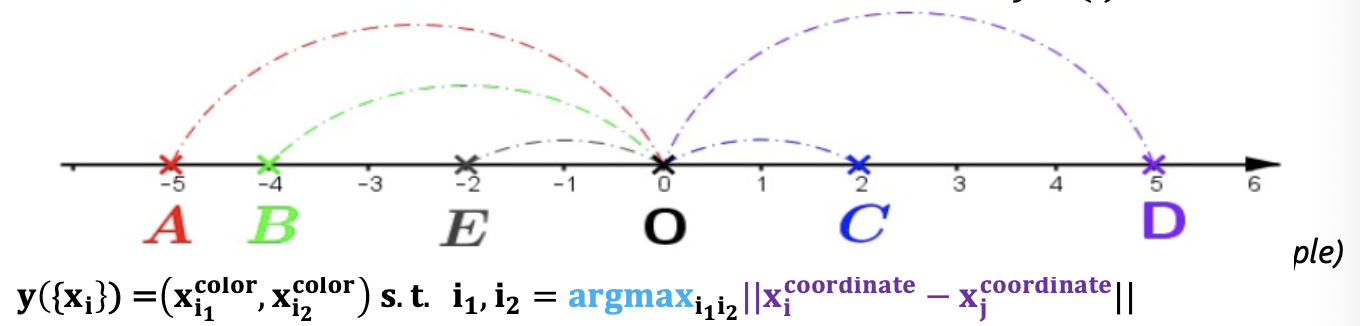

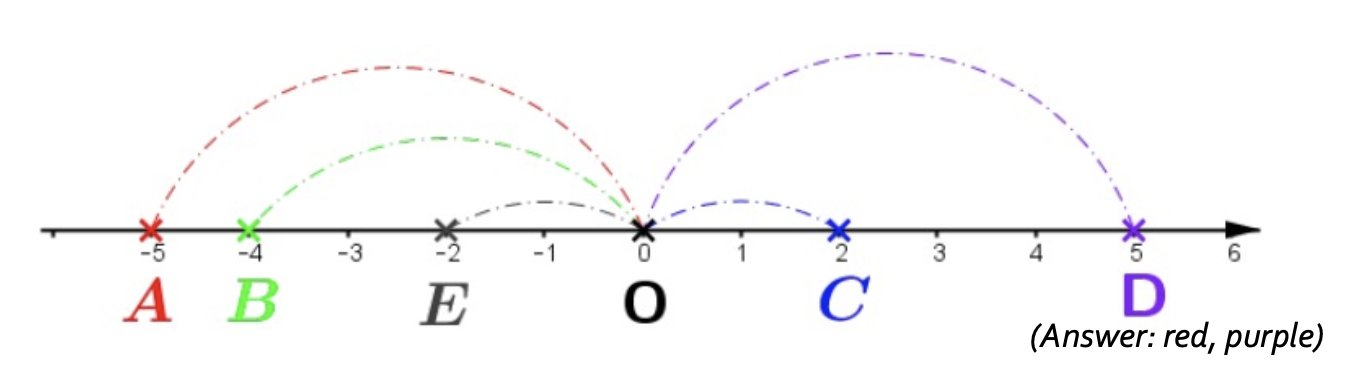

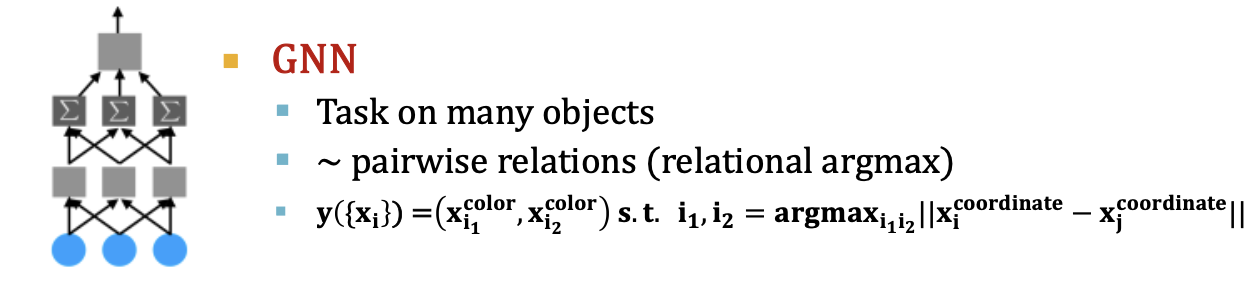

- Problem 3 (relational argmax)

- Input: a set of objects , each with features containing their coordinate and color

- Task Output: property of pairwise relation (e.g., what are the colors of the two furthest away objects?)

- s.t.,

- DeepSet poorly suited to modelling pairwise relations

- Recall:

- Reason:

- task requires comparing pairs of objects - i.e., a for-loop

- each object processed independently by

- Consequence: has to learn complex for-loop (hard)

- provably cannot learn pairwise relations Theorem: Suppose if and only if . Then there is no such that

- GNN well suited to this task: for-loop is built in!

- E.g., recall GIN update

- For

-

General algorithm class for GNN?

- GNNs are good at solving tasks that require relating pairs of objects (nodes)

- MLPs/DeepSets cannot do this easily since they have to learn for-loop

- “Relational argmax” is just one problem that GNN can solve….

Algorithmic Class of GNNs

Dynamic Programming

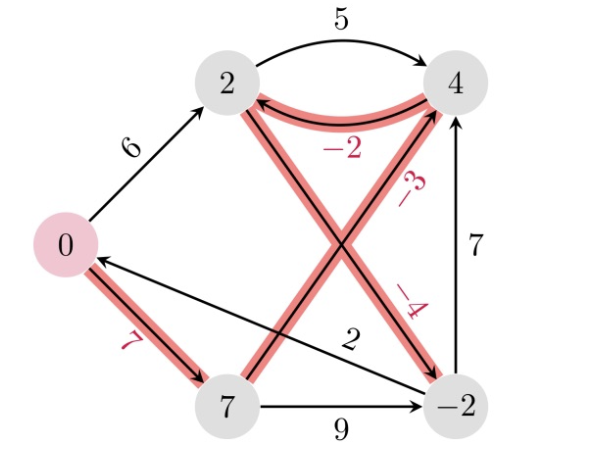

- Task 4 (shortest path):

- Input: a weighted graph and a chosen source node

- Output: all shortest paths out of source node (shortest path tree)

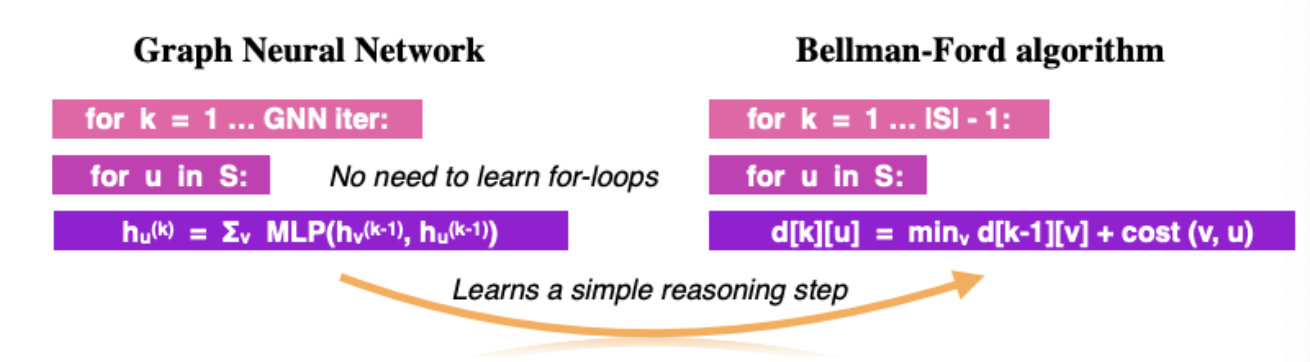

GNN are Dynamic Programs

- Dynamic programming has very similar form to GNN

- Both have nested for-loops over:

- Number of GNN layers / iterations of BF

- Each node in graph

- GNN aggregation + MLP only needs to learn sum + min

- An MLP trying to learn a DP has to learn double-nested for loop (really hard to do)

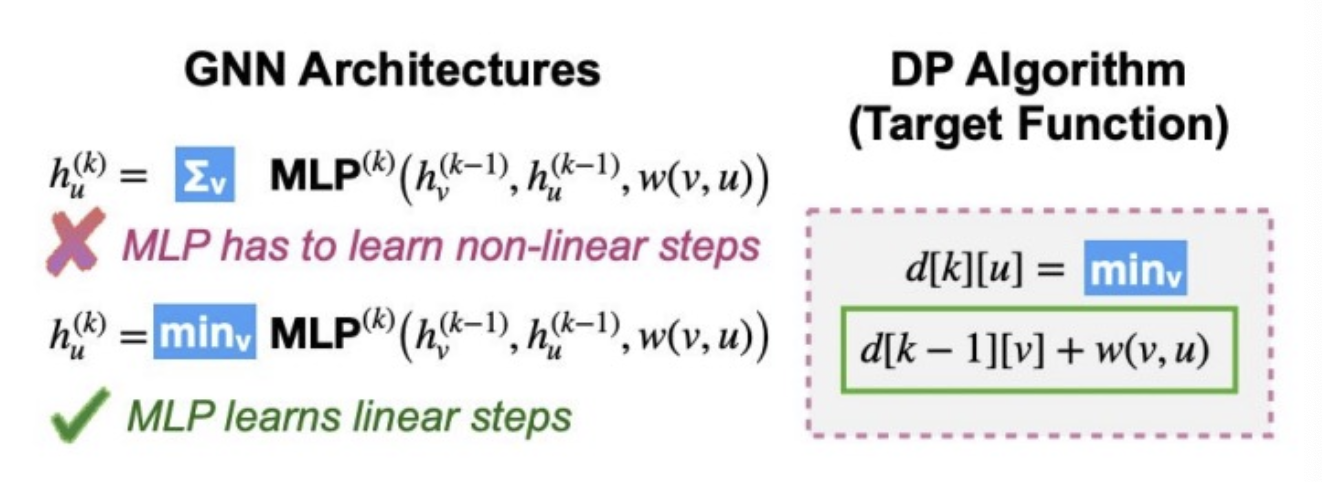

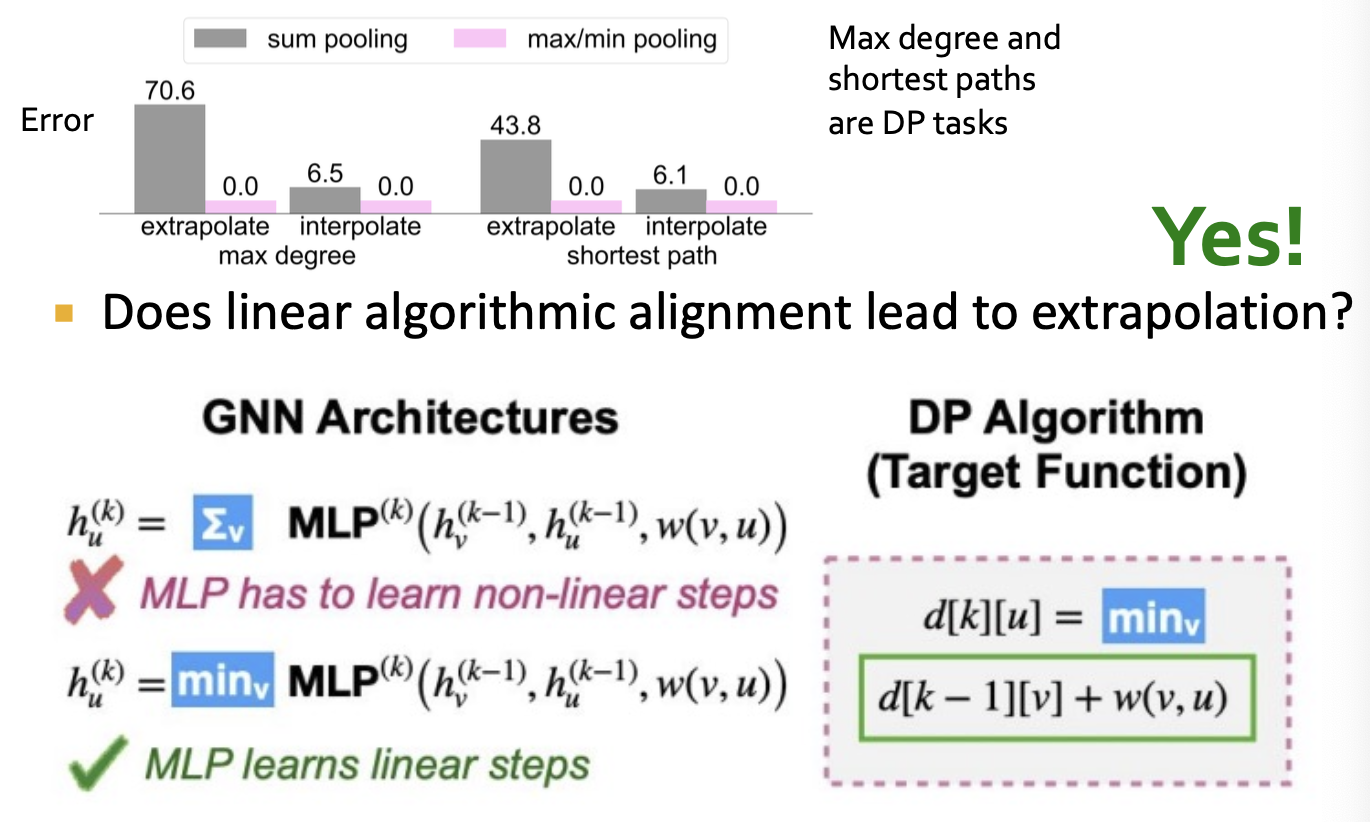

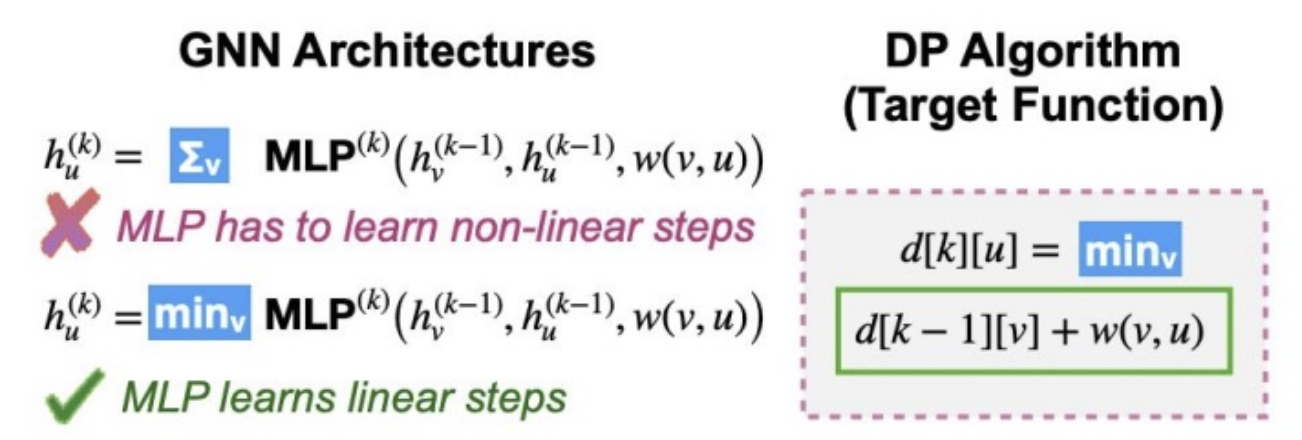

- There is an even better choice of GNN..

- Choose min activation to match DP

- Then MLP only needs to learn linear function!

Algorithmic Alignment

Algorithmic-Centric Principle For Neural Network Design

- In the previous section we studied what type of tasks GNN excel at solving

- Key idea: Focus on the algorithm that solves the tasks

- If the neural net can express the algorithm easily, then it’s a good choice of architecture.

Algorithmic Alignment

Given a target algorithm , a neural network architecture if:

- a simple function

- can express

- Each has few learnable parameters. (so can learn easily)

Designing New Neural Nets with Algorithmic Alignment

- GNN is algorithmically aligned to dynamic programming (DP)

- But algorithmic alignment is a general principle for designing neural network architectures

- So we should be able to use it to design entirely new neural networks given a particular problem

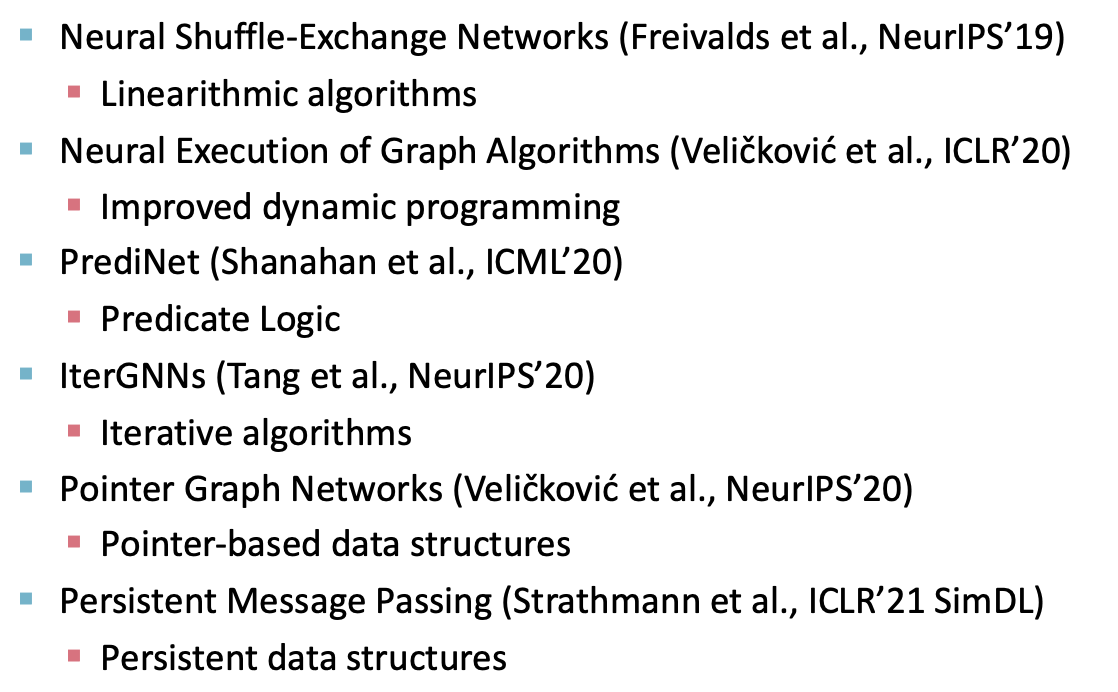

- Many successful example of this in this

Applications of Algorithmic Alignment

- Application 1: building a network to solve a new task

- The subset-sum problem (NP-hard)

- Application 2: building neural networks that can generalize out-of-distribution

- The linear algorithmic alignment hypothesis

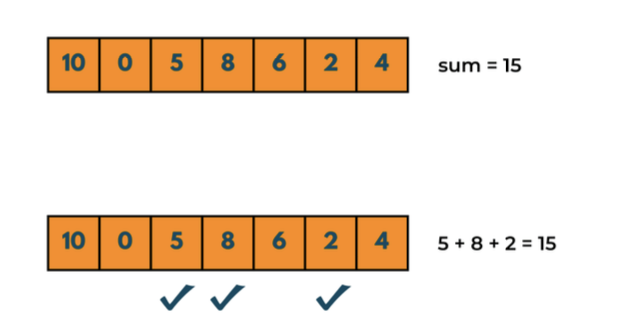

Solving an NP-hard Task: Subset Sum

- Task: given a set of number , decide if there exists a subset that sums to .

- Known to be NP-hard, no DP algorithm can solve this (so GNN not suitable)

- Exhaustive Search Algorithm for solving subset sum:

- Loop over all subset and check is sum is

- Clearly not polynomial time… but can it inspire a neural net architecture?

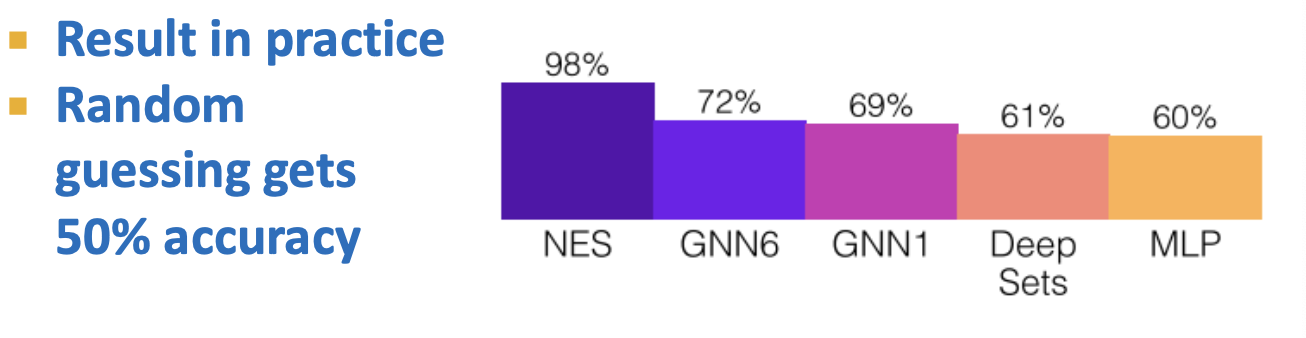

- Neural Exhaustive Search:

- Given ,

-

- Algorithmically aligned to exhaustive search:

- LSTM learns if the sum (simple function)

- Max aggregation identifies best subset

- MLP maps to true/false value

- Algorithmically aligned to exhaustive search:

Algorithmic Alignment and Extrapolation

- We have argued that algorithmic alignment can help inspire architectures well suited to particular tasks

- By well suited, we mean generalizes well using little training data

- But true AI requires something stronger than this…

- Also needs to “extrapolate” to instances that look very different from the training data

- Extrapolation is also called out-of-distribution generalization

- Extrapolation is a holy grail of AI, necessary for systems to behave reliably in unforeseen future situations

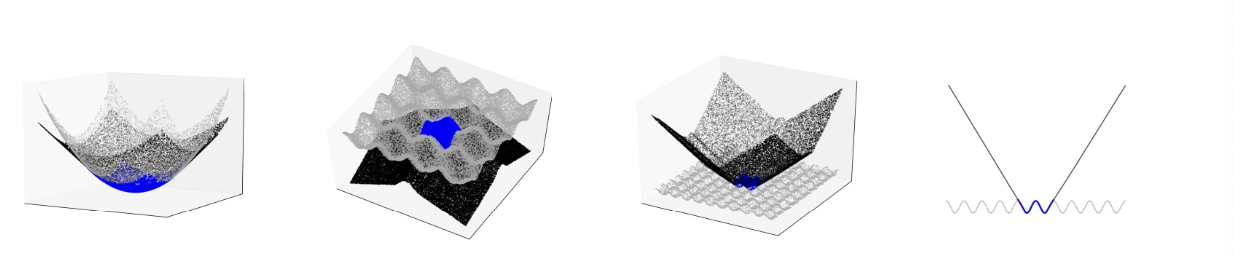

How MLPs extrapolate

- Observation: ReLU MLPs extrapolate linearly

- Can be proved that extrapolation is perfect for linear target functions

- But ReLU MLPs cannot generalize for non-linear target functions…

- The need for linearity for MLP extrapolation suggests a hypothesis for GNN extrapolation…

The Linear Algorithmic Alignment Hypothesis

- Linear Algorithmic Alignment Hypothesis

Linear algorithmic alignment implies a neural network can extrapolate to unseen data

- Linear Algorithmic Alignment

Given a target algorithm , a neural network architecture linearly aligns if:

- can express

- contains a combination of non-linearities and MLPs

- Each MLP in only has to learn a linear map to perfectly fit

How GNNs extrapolate

- Recall GNN for learning dynamic programs

- GNN aggregation function is key

- Min aggregation is linearly algorithmically aligned

- Sum aggregation is not

- Does linear algorithmic alignment lead to extrapolation?